YouTube Censorship: The Video They Didn't Want You to See!

Audio Brief

This episode explores how YouTube's demonetization policies have become a potent tool for content suppression, significantly impacting serious journalism and public discourse.

There are three key takeaways from this discussion. First, demonetization extends beyond a financial penalty, acting as a critical mechanism for algorithmic content suppression. Second, the system, driven by advertiser brand safety, creates a chilling effect on creators covering complex, important topics. Third, this environment has fostered the rise of "algospeak," directly degrading clarity in online communication.

Demonetization, often signaled by a yellow dollar sign, severely limits a video's discoverability and organic growth on YouTube. It de-prioritizes content in recommendations and search results, effectively shelving it. This process functions as censorship by proxy, discouraging creators from even attempting nuanced discussions on controversial subjects.

The current monetization system originated with the "Adpocalypse," when major advertisers pulled spending after their brands appeared alongside offensive content. In response, YouTube implemented blunt, automated filters, prioritizing brand safety above all else. This system notoriously struggles with context, frequently penalizing serious analysis alongside genuinely harmful content.

To navigate these automated content filters, creators increasingly resort to "algospeak," using coded language to discuss sensitive subjects. This practice dilutes content quality and clarity, making it harder for audiences to engage effectively with important topics. It is a direct consequence of algorithmic censorship, ultimately impacting the integrity of online information.

Ultimately, YouTube's content policies highlight a fundamental tension between brand safety, freedom of expression, and fostering informed public debate.

Episode Overview

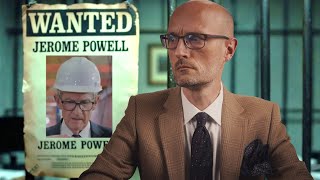

- The speaker, Patrick Boyle, recounts his experience of having a highly successful video on the Epstein files abruptly demonetized and suppressed by YouTube's algorithm.

- He explains that demonetization is more than just a loss of ad revenue; it's a form of "censorship by proxy" that drastically reduces a video's reach and visibility.

- The episode traces the origin of these strict policies back to the "Adpocalypse," where advertiser concerns over brand safety led to the implementation of blunt, automated content filters.

- Boyle argues that this system inadvertently penalizes serious journalism and in-depth analysis on important topics, while often failing to police actual scams and harmful content.

Key Concepts

- Demonetization: The process by which YouTube limits or removes ad revenue from a video deemed unsuitable for most advertisers, often indicated by a yellow dollar sign icon.

- Algorithmic Suppression: Beyond financial impact, demonetization leads to a video being de-prioritized by YouTube's recommendation and search algorithms, effectively "shelving" the content and halting its growth.

- Censorship by Proxy: The concept that demonetization acts as an indirect form of censorship. By creating financial disincentives and reducing distribution, the platform discourages creators from covering controversial but important subjects.

- Brand Safety & The "Adpocalypse": YouTube's monetization policies were drastically tightened after major advertisers pulled their spending in response to their ads appearing alongside offensive content (e.g., Logan Paul's "suicide forest" video). This event, known as the "Adpocalypse," led to the current automated system.

- Algospeak: A coded online dialect in which creators use substitute words (e.g., "unalive" instead of "dead") to bypass automated content moderation filters and avoid demonetization. The practice degrades the quality and clarity of public discourse.

Quotes

- At 00:34 - "Put simply, the demonetization of videos and content on YouTube suppresses that content and drastically affects a user's ability to find the content and negatively impacts a content creator in trying to get their content to an audience." - explaining that demonetization's impact extends far beyond just lost ad revenue.

- At 03:06 - "It was a simple commercial calculation. They worried that appearing next to offensive content like a Logan Paul video might be misconstrued as an endorsement or simply link their brand to something awful in the viewer's mind." - detailing the advertiser mindset that led to the "Adpocalypse" and YouTube's subsequent strict content policies.

- At 07:54 - "This safety filter... notoriously fails to grasp context. The algorithm groups Nick Fuentes and a historian explaining an important World War II battle into the same category, and then both face the same penalty: the revenue cliff." - illustrating how the automated system is a blunt instrument that cannot distinguish between promoting hate and providing historical education.

Takeaways

- Understand that demonetization on YouTube is a powerful tool for content suppression, not just a financial penalty. It directly impacts a video's discoverability and can severely stunt a channel's growth.

- Be aware that the current system, driven by brand safety, creates a chilling effect on serious journalism. It incentivizes creators to avoid nuanced discussions on important but controversial topics in favor of safer, algorithm-friendly content.

- The rise of "algospeak" is a direct consequence of algorithmic censorship. It forces creators to dilute their language to survive, degrading the quality of information and making it harder for audiences to understand serious subjects.